I’ve written before about the claim that there has been no warming for the last 16 years. This claim is often made by those who are skeptical of – or deny – the role of man in climate change. As I mentioned in my earlier post, the claim arises because the natural scatter in the temperature anomaly data is such that it is not possible to make a statistically significant statement about the warming trend if one considers too short a time interval.

The data that is normally used for this is the temperature anomaly. The temperature anomaly is the difference between the global surface temperature and some long-term average (typically the average from 1950-1980). This data is typically analysed using Linear Regression. This involves choosing a time interval and then fitting a straight line to the data. The gradient of this line gives the trend (normally in °C per decade) and the analysis also determines the error in the trend from the scatter in the anomaly data. What is normally quoted is the 2σ error. The 2σ error tells us that there is a 95% chance that the actual trend lies between (trend – error) and (trend + error). The natural scatter in the temperature anomaly data means that if the time interval considered is too short, the 2σ error can be larger than the gradient of the best fit straight line. This means that we cannot rule out (at a 95% level) that surface temperatures could have decreased in this time interval.
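To make the description above concrete, here is a minimal sketch of an ordinary least-squares trend and its 2σ error. The function name `trend_and_2sigma` and the synthetic data are my own for illustration. It assumes the residuals are independent, so it will tend to underestimate the error for real, autocorrelated temperature data.

```python
import numpy as np

def trend_and_2sigma(years, anomaly):
    """Least-squares trend and its 2-sigma error, both in °C per decade.

    Assumes independent residuals (no autocorrelation correction).
    """
    years = np.asarray(years, dtype=float)
    anomaly = np.asarray(anomaly, dtype=float)
    n = years.size
    x = years - years.mean()                 # centre the time axis
    y = anomaly - anomaly.mean()
    sxx = np.sum(x**2)
    slope = np.sum(x * y) / sxx              # °C per year
    residuals = y - slope * x
    # Standard error of the slope from the residual scatter (n - 2 degrees of freedom)
    se = np.sqrt(np.sum(residuals**2) / (n - 2) / sxx)
    return 10.0 * slope, 10.0 * 2.0 * se

# Synthetic example (made-up numbers, not a real dataset):
# 0.15 °C per decade of warming plus random scatter of 0.1 °C.
rng = np.random.default_rng(0)
years = np.arange(1980, 2013)
anomaly = 0.015 * (years - 1980) + rng.normal(0.0, 0.1, years.size)

trend, err = trend_and_2sigma(years, anomaly)
print(f"trend = {trend:.3f} ± {err:.3f} °C per decade")
```

Shortening the `years` array in this example makes the quoted error grow relative to the trend, which is the effect the post is about.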

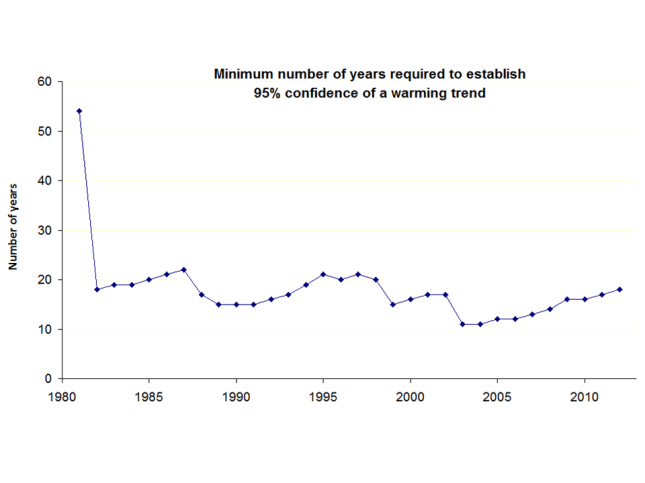

We are interested in knowing if the surface temperatures are rising at a rate of between 0.1 and 0.2 °C per decade. Typically we therefore need to consider time intervals of about 20 years or more if we wish to get a statistically significant result. The actual time interval required does vary with time, and the accompanying figure shows, for the last 30 years, the amount of time required for a statistically significant result. This was linked to from a comment on a Guardian article so I don’t know who to credit. Happy to give credit if someone can point out who deserves it. The figure shows that, in 1995, the previous 20 years were needed if a statistically significant result was to be obtained. In 2003, however, just over 10 years was required. Today, we need about 20 years to get a statistically significant result. The point is that at almost any time in the past 30 years, at least 10 years of data – often more – was needed to determine a statistically significant warming trend, and yet no one would suggest that there has been no warming since 1980. That there has been no statistically significant warming since 1997 is therefore not really important. It’s perfectly normal, and there are plenty of other periods in the last 30 years where 20 years of data were needed before a statistically significant signal was recovered. It just means we need more data. It doesn’t mean that there’s been no warming.

Hi totheleft,

Interesting post, though I might disagree on the premises; I’d say the choice of a linear regression is not appropriate in this context, therefore conclusions on how many years we need to claim statistical significance do not follow. But you say “This data is typically analysed using Linear Regression.” which I find surprising; do you have any link to climatologists’ papers doing this?

Good question. I may have been slightly simplistic in my description of the analysis. Typically the trend line can be determined using Linear Regression, but the data are correlated, so standard linear regression doesn’t give the correct error and you need to take that correlation into account. Is that what you meant when you said linear regression is not appropriate, or did you mean something else?

This doesn’t really change what I was getting at here though. Given the scatter/noise in the data, there will be some minimum time interval for which a statistically significant result can be obtained (at the 2σ level). I believe that the figure that I included computes the errors correctly.

Yes you are right; there are auto correlation issues as well as model choice issues.

The thing is that I do not know exactly what that figure is computing. It seems as if it is treating the trend as if it were a random variable itself, but I already see problems of autocorrelation again with that approach.

You see, you cannot establish confidence levels or statistical significance until you reach a model which strips the information from your data so that all that is left is noise. I would say linear regressions in this context are spurious.

To say temperatures are going up or going down we need a reference model, and then we can say “with respect to that model, temperatures go up/down”; and, considering NASA models are failing with all their bells and whistles, a linear regression for this problem is likely to be wishful thinking.

But maybe I’m missing some kind of climatologists’ standard technique or something; do you have a link to the paper where that plot comes from?

I think the figure is a fairly simple illustration. Choose a date (1985 for example) and then go back in steps of one year (for example) until the 2σ error is smaller than the trend. For example, what is the trend and error if you consider only data for 1984 and 1985? What is the trend and error if you consider data for 1983, 1984 and 1985? Carry on increasing the time interval until the error is smaller than the trend. Repeat this for all years of interest.
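The procedure described above can be sketched roughly as follows. This is only my reading of how such a figure could be produced, not the code behind the figure itself. The names `trend_and_2sigma` and `years_needed` are hypothetical, the errors ignore autocorrelation, and the search starts at three points rather than two because a two-point fit has no scatter from which to estimate an error.

```python
import numpy as np

def trend_and_2sigma(years, anomaly):
    """OLS trend and 2-sigma error (°C per decade), assuming independent residuals."""
    x = np.asarray(years, dtype=float)
    y = np.asarray(anomaly, dtype=float)
    x = x - x.mean()
    y = y - y.mean()
    sxx = np.sum(x**2)
    slope = np.sum(x * y) / sxx
    resid = y - slope * x
    se = np.sqrt(np.sum(resid**2) / (x.size - 2) / sxx)
    return 10.0 * slope, 20.0 * se

def years_needed(years, anomaly, end_year, min_points=3):
    """Shortest span (in years) ending at end_year for which the trend is
    positive and larger than its 2-sigma error. Returns None if no such
    span exists in the data. Assumes one value per year."""
    years = np.asarray(years)
    anomaly = np.asarray(anomaly, dtype=float)
    end = int(np.flatnonzero(years == end_year)[0])
    # Step the start of the window back one year at a time.
    for start in range(end - min_points + 1, -1, -1):
        trend, err = trend_and_2sigma(years[start:end + 1], anomaly[start:end + 1])
        if trend > err:
            return int(years[end] - years[start] + 1)
    return None

# Synthetic annual anomalies (made-up numbers): 0.15 °C/decade plus scatter.
rng = np.random.default_rng(42)
years = np.arange(1950, 2013)
anomaly = 0.015 * (years - 1950) + rng.normal(0.0, 0.1, years.size)

for end_year in (1985, 1995, 2003, 2012):
    print(end_year, years_needed(years, anomaly, end_year))
```

Note that this returns the first span that passes the test, so, as discussed in the next paragraph, a slightly longer span could in principle fail it again.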

I appreciate that the result may not strictly be unique. For example, you could find that if you consider data from 1970 to 1985 you get a 2σ error that is smaller than the trend, but if you include 1969, it is suddenly bigger again. Typically, however, the longer the time interval, the smaller the error.

The basic point of the figure is to illustrate that to get a statistically significant trend (at the 2σ level) you typically need to consider a time interval of between 10 and 20 years. Therefore, anyone stating that there has been no warming since 1997 does not understand what statistically significant means. There are many times in the last 30 years when if we had only considered the previous 16 years the warming trend would not have been statistically significant. That, however, did not mean that there had been no warming. Similarly, that the warming trend since 1997 is not statistically significant does not mean that there has been no warming since 1997.

I should add that I’m confused by your mention of models. The temperature anomaly data is normally relative to some long term average, typically 1950-1980. I don’t see why you need to consider models when analysing this data.

I should also add, that I don’t have a link to a paper. As I mentioned in the post the figure was linked to from a Guardian comment. I did, however, use the Skeptical Science trend calculator to check that it made sense (by testing a few years). I also recently wrote my own linear regression code to check the Skeptical Science trend calculator, but couldn’t quite work out how to properly get the auto-correlated errors. If you want to know more about the use of linear regression in calculating warming trends, you could look at the Skeptical Science website.
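For what it’s worth, one common first-order way to account for autocorrelation is to treat the residuals as an AR(1) process and inflate the standard error using their lag-1 autocorrelation. The sketch below does that. It is an assumption on my part that this is a reasonable approximation here, and it is not necessarily what the Skeptical Science trend calculator does. All function names are hypothetical.

```python
import numpy as np

def lag1_autocorr(residuals):
    """Lag-1 autocorrelation coefficient of a residual series."""
    r = np.asarray(residuals, dtype=float)
    r = r - r.mean()
    return float(np.sum(r[:-1] * r[1:]) / np.sum(r**2))

def ar1_inflation(r1):
    """Factor by which to widen the naive standard error for AR(1) residuals.
    Negative r1 is clipped to zero so the error is never narrowed."""
    r1 = min(max(r1, 0.0), 0.99)
    return float(np.sqrt((1.0 + r1) / (1.0 - r1)))

def trend_with_ar1_error(years, anomaly):
    """OLS trend and AR(1)-adjusted 2-sigma error, both in °C per decade."""
    x = np.asarray(years, dtype=float)
    y = np.asarray(anomaly, dtype=float)
    x = x - x.mean()
    y = y - y.mean()
    sxx = np.sum(x**2)
    slope = np.sum(x * y) / sxx
    resid = y - slope * x
    se = np.sqrt(np.sum(resid**2) / (x.size - 2) / sxx)   # naive standard error
    r1 = lag1_autocorr(resid)
    return 10.0 * slope, 20.0 * se * ar1_inflation(r1), r1

# Synthetic monthly data with AR(1) noise (made-up numbers).
rng = np.random.default_rng(3)
t = 1997.0 + np.arange(180) / 12.0
noise = np.zeros(t.size)
for i in range(1, t.size):
    noise[i] = 0.6 * noise[i - 1] + rng.normal(0.0, 0.1)
anomaly = 0.005 * (t - 1997.0) + noise

trend, err, r1 = trend_with_ar1_error(t, anomaly)
print(f"trend = {trend:.3f} ± {err:.3f} °C per decade (lag-1 autocorrelation {r1:.2f})")
```

With positively correlated residuals the adjusted error is wider than the naive one, so even more data is needed before a trend becomes statistically significant.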

Hi, thanks for the explanation, I’ll try to clarify what I meant.

I see many problems with that approach but let me focus on just one; you said “…Carry on increasing the time interval until the error is smaller than the trend…” That means that if the trend is zero you will never achieve that (since you cannot have an error smaller than zero), and therefore I can never disprove your claims, since you will keep saying you need more data before statistical significance is achieved. Can you see the incoherence in this approach? And this is only one problem.

Temperature anomaly is nothing more than the delta between temperatures; you don’t actually choose the year you want to make those temperatures relative to, the year of reference is the mean resulting from the years you consider in your plot.

You need models to analyze this or any other data, and your conclusions and predictions depend on the validity of your assumptions. The same data with different models would allow us to claim that we have global warming or that we are heading towards another ice age. That is why models need to be validated.

I completely agree – in principle – with the first part of your comment. If the trend were indeed zero, then we could never get a statistically significant warming trend. That’s why in the last paragraph of my post I specifically say “we are interested in knowing if the temperature is rising at a rate of between 0.1 and 0.2 °C per decade”. That at least sets a level that one might regard as significant. We are also interested in whether or not there is a warming trend (i.e. is the trend positive and is the 2σ error smaller than the trend) and so I still think the graph has merit. Given that definition of a warming trend, we can determine – at any instant – how many years are required to determine if the trend is statistically significant or not. That there appears always to be a time over which a statistically significant warming trend can be recovered suggests that warming is indeed taking place. If it weren’t, the required time interval would be infinite.

The second part of your comment is incorrect. The mean is predefined as the average of some time interval, normally 1950-1980 but it does depend somewhat on the dataset. It is not the mean of the years considered in the plot.

I also disagree with the latter part of your comment. We don’t need models to analyse the data. We have data and can determine the warming trend and the error in that trend. We may need models to interpret what this means for the future, but we don’t need models to do the basic data analysis. The point of this post is simply to illustrate that the statement “there has been no warming since 1997” is wrong. The warming trend since 1997 may not be statistically significant, but that doesn’t mean that there has been no warming. There are many instants in the last 30 years where the warming trend for the previous 16 years was not statistically significant, but which fall in periods over which we now know warming took place.

Ah, I saw your latest post now. Yeah, I know about the blog http://www.skepticalscience.com; there is also this popular blog, wattsupwiththat.com, that is like its nemesis…

Oh, I do know about linear regressions, I’m just saying that IMHO they make little sense in this context; that is why I was curious to see how they are used in peer-reviewed papers, because personal blogs… well, if I don’t much trust peer-reviewed papers you can imagine what I think about blogs… and I am writing one myself! 😉

I’m a little confused as to why you think Linear Regression makes little sense in this context. Given the temperature anomaly data, how else would you determine the trend and the error in the trend? Or are you arguing that trying to determine this doesn’t make sense?

There is a link on this Met office site to a paper that seems to use Linear least squares to determine the trend and the errors (see Figures 11 and 12). Haven’t read it in detail, but seems to be using the same basic technique as is used here.

“The second part of your comment is incorrect. The mean is predefined as the average of some time interval, normally 1950-1980 but it does depend somewhat on the dataset. It is not the mean of the years considered in the plot.”

I’ll clarify what I meant; sure, you can make the temperature anomalies relative to anything you want, but this is absolutely arbitrary. I imagine that the 1950-1980 average has been “chosen” because this way you don’t have to update anomaly databases with every daily data point you have, but as time goes by keeping that point will make less and less sense and a new “arbitrary” point will be chosen… Anyhow, my whole point is that 1950-1980 or any other point you choose is _irrelevant_ when it comes to analyzing the data. For instance, Mr. Hansen at NASA chose an entirely different point for his papers in 1988 for obvious reasons.

I don’t really understand why it would make any difference what the base temperature is. The linear regression doesn’t depend on this. I won’t get a different trend and different errors if I choose a different base temperature, which is what you say yourself. Maybe you can clarify your comment about Hansen. I tried to download his paper, but couldn’t access it.
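A quick way to check that the choice of base temperature doesn’t matter is to subtract two different constants from the same series and fit both. The sketch below uses made-up temperatures and two hypothetical baselines. Changing the baseline only shifts the data up or down, so the fitted trend and its error should come out the same.

```python
import numpy as np

def trend_and_2sigma(years, values):
    """OLS trend and 2-sigma error per decade, assuming independent residuals."""
    x = np.asarray(years, dtype=float)
    y = np.asarray(values, dtype=float)
    x = x - x.mean()
    y = y - y.mean()
    sxx = np.sum(x**2)
    slope = np.sum(x * y) / sxx
    resid = y - slope * x
    se = np.sqrt(np.sum(resid**2) / (x.size - 2) / sxx)
    return 10.0 * slope, 20.0 * se

# Made-up absolute temperatures (°C), for illustration only.
rng = np.random.default_rng(1)
years = np.arange(1997, 2013)
temps = 14.0 + 0.01 * (years - 1997) + rng.normal(0.0, 0.1, years.size)

anom_a = temps - 14.0   # anomalies relative to hypothetical baseline A
anom_b = temps - 13.7   # anomalies relative to hypothetical baseline B

trend_a, err_a = trend_and_2sigma(years, anom_a)
trend_b, err_b = trend_and_2sigma(years, anom_b)
print(f"baseline A: {trend_a:.4f} ± {err_a:.4f}")
print(f"baseline B: {trend_b:.4f} ± {err_b:.4f}")
```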

“I also disagree with the latter part of your comment. We don’t need models to analyse the data. We have data and can determine the warming trend and the error in that trend.”

A linear trend is still a model, a simple linear model but still a model.

No, I disagree. Linear regression is a method for analysing a data set. It may not always be appropriate (which is maybe what you’re trying to say) but it is not really a model. It seems entirely reasonable to use Linear regression to determine the trend in the temperature anomaly data. That’s all that I’m doing here. I’m not making any claims about what this means in terms of global warming. All I’m trying to show is that there is typically a minimum time interval required in order for the trend to be larger than the 2σ errors. It’s a fairly simple thing and I’m kind of surprised that we’re having this discussion to be honest. I didn’t really think that this would be contentious.

“I’m a little confused as to why you think Linear Regression makes little sense in this context. Given the temperature anomaly data, how else would you determine the trend and the error in the trend? Or are you arguing that trying to determine this doesn’t make sense?”

That’s exactly what I mean… but let me check the paper at Met office and I’ll be back to you.

Back… 🙂 Thanks for the paper.

But actually the paper has nothing to do with what we are discussing. The authors simply propose a refinement to the way raw temperature data is analyzed, for a better description of global warming.

They use linear trends to compare the difference between *treatments* (this is what you see in fig 11 and 12) and in no way do they use the linear regressions to make any prediction about the evolution of global warming.

I was a bit worried though when I began reading, ha ha 🙂 but to be honest I will be awed if you find a peer-reviewed paper using linear regressions to make statements about how temperatures evolve.

Let’s go back a step here. It is regularly claimed that there has been no warming since 1997. This is because the Met Office (and others) presented their temperature anomaly data and produced a trend and 2σ error from 1997 and it turned out that the 2σ error was bigger than the trend. Many then used this to claim that there has therefore been no warming. Do you agree with this?

You seem to think that I’m trying here to use linear regression to make predictions about global warming. I am not. I am simply trying to illustrate that if one is using the temperature anomaly data and if one wants to determine if there is a warming trend, one will need to consider typically more than 10 years worth of data if this trend is to be statistically significant (at the 2σ level). This is a fairly simple thing. I think you’re reading far too much into what this post is about.

Hold on, I think you’ve misread the paper. The different lines are for the different data sets. They’ve plotted linear trends (plus errors) for the 4 different data sets. It’s not different treatments. There is no real difference between what they’ve done and what’s been done here. The plot here was simply done using a single data set.

I think you misunderstand what I’m trying to say in this post. I’m not trying to use linear regression to make statements about how temperatures evolve. I’m simply trying to illustrate how the error in the trend will typically decrease as one increases the time interval considered. That is really all. Nice and simple.

You say “Hold on, I think you’ve misread the paper. The different lines are for the different data sets….”

The different datasets are the result of different treatments. I mean, historical temperatures from London, Madrid, Paris… are the same for everyone, and the same goes for any other meteorological stations in the world, which they all have access to. Since there are many different ways to treat nuances, the outcome is different datasets from the *same* raw data; thus, they are actually comparing the treatments.

This is clearly stated in the abstract: “Recent developments in observational near-surface air temperature and sea-surface temperature analyses are combined to produce HadCRUT4, **a new data set** of global and regional temperature evolution from 1850 to the present”

Also, they make no claims on how global warming is evolving; their interest focuses on the HadCRUT4 dataset and how it compares to the other datasets resulting from different **treatments**.

Sure, I agree, but I don’t see how this differs from what I’m doing here. There is a dataset. It goes back 160 years. I can determine the trend and error at any time and over any time interval in that dataset. That’s all that I’ve really done. I’m not actually making any claims about how global temperatures are evolving. All I’m doing is showing that there are many instances in the last 30 years in which you would need to consider more than 15 years’ worth of data (from the dataset) if you were to get a trend that was positive and the magnitude of which exceeded the 2σ error. That is all.

I’m actually starting to get confused about what we’re discussing here. I think I’ve defined things quite clearly and have quite clearly explained what I’m trying to illustrate. You asked if there was a peer-reviewed paper that used linear fitting to determine trends in temperature anomaly data. I found you one and you now think it’s somehow not relevant.

“I think you misunderstand what I’m trying to say in this post. I’m not trying to use linear regression to make statements about how temperatures evolve. I’m simply trying to illustrate how the error in the trend will typically decrease as one increases the time interval considered. That is really all. Nice and simple.”

Oh, yeah, I do appreciate that you focus on the trend and its estimation error; I’m just saying that this does not make much sense to me… but let’s agree to disagree on this one 😉

That’s actually fine. Then you should agree that the statement “there has been no warming since 1997” is wrong. It is based on linear analysis of temperature anomaly data. That is really all that I’m trying to get across here. All I was trying to do was to use the same analysis that is the basis of that claim. I think it’s wrong because it misinterprets the term “statistically significant”. You think it’s wrong because the entire analysis doesn’t make sense. The conclusions are, however, consistent.

I’ve got to say that I still don’t really understand why this analysis doesn’t make sense to you. It’s a pretty standard technique and gives information about the trend in the data. I completely accept that one should be careful in how one interprets that, but there’s nothing fundamentally wrong with using linear regression.

“Then you should agree that the statement “there has been no warming since 1997” is wrong.”

It is not wrong, it is a fact (Anomalies from BEST):

date 1997 11 temp 0.510

date 2011 11 temp 0.471

Let’s see if I get you right. Since you say people are claiming there has been no global warming in the last 15 years, allegedly based on linear regressions, you are using trend estimates to state that, unless the trend is bigger than the 2 sigma error, no statistical significance can be claimed on how global warming is evolving; you claim we need more time to achieve such significance and, therefore, these people are wrong to make those claims. Am I correct?

Well, first, I don’t know if they make those claims based on linear trends, but check this comment from Dr. Phil Jones (who is anything but a skeptic) that I got after hopping from your post to another post and eventually to the Daily Mail:

“He further [Professor Phil Jones] admitted that in the last 15 years there had been no ‘statistically significant’ warming, although he argued this was a blip rather than the long-term trend.”

Read more: http://www.dailymail.co.uk/news/article-1250872/Climategate-U-turn-Astonishment-scientist-centre-global-warming-email-row-admits-data-organised.htm

Probably you don’t doubt Dr. Phil Jones, though unfortunately the article does not say how he calculated his ‘statistical significance’, but it contradicts the linear approach you show here. That is why I was asking you for peer reviewed papers on **this particular** subject using linear regressions the way you do.

Okay, but this is the point I’m trying to make. There is a difference between “there has been no statistically significant warming” and “there has been no warming”.

I must firstly say that I’m surprised that you think it’s okay to present two data points (one in 1997 and one in 2011) to claim that there has been no warming.

I’m going to try and explain this again, to see if we can reach some kind of agreement. If you use Linear regression to determine the trend in the temperature anomaly data and if you only consider the data since 1997, you discover that the trend is positive, but that the magnitude of the trend is smaller than the 2σ error. This means that we cannot state (with 95% confidence) that warming has occurred since 1997. We can also not state (with 95% confidence) that it hasn’t.

If, for example, we consider the HADCRUT4 data set, using Linear regression gives that since 1997, the trend has been 0.046 ± 0.124 °C per decade. Given that these are 2σ errors, this means that we can say with 95% confidence that the trend has been between -0.078 and 0.17 °C per decade. That, as far as I’m concerned, is irrefutable. I’ve defined the data set. I’ve defined the method. I’ve defined the level of error that we consider (2σ). The data is telling us that we can’t claim that warming has taken place and we can’t claim that it hasn’t.

However, many people claim that the data is telling us that warming has not taken place. My claim is that this statement is wrong. You quote Phil Jones. However, he didn’t say warming hasn’t occurred. He says that there has been no “statistically significant” warming. I agree with this statement. It, however, isn’t the same as “no warming has taken place”.
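The arithmetic here is simple enough to write out. This short sketch takes the trend and 2σ error quoted above and checks what the resulting interval does and does not rule out. The variable names and the 0.1 to 0.2 and 0.5 thresholds are just the levels discussed in this thread.

```python
trend = 0.046       # °C per decade since 1997 (value quoted above)
two_sigma = 0.124   # 2-sigma error, °C per decade (value quoted above)

lower = trend - two_sigma
upper = trend + two_sigma
print(f"95% range: {lower:.3f} to {upper:.3f} °C per decade")

# Statistically significant warming requires the whole range to be above zero.
significant_warming = lower > 0
# "No warming" can only be claimed at this level if the whole range is at or below zero.
significant_no_warming = upper <= 0
# Is warming of 0.1 to 0.2 °C per decade still consistent with the range?
consistent_with_0p1_to_0p2 = lower <= 0.2 and upper >= 0.1
# Is warming of 0.5 °C per decade or more ruled out?
rules_out_0p5 = upper < 0.5

print(significant_warming, significant_no_warming,
      consistent_with_0p1_to_0p2, rules_out_0p5)
```

Both of the first two flags come out false, which is the whole point: the data since 1997 supports neither “warming has occurred” nor “warming has not occurred” at the 95% level.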

What I was pointing out in the above post is that if you were to use Linear Regression (which is used) and if you were to consider 2σ errors, there are many instances in the last 30 years where a time interval in excess of 15 years was required before a statistically significant warming trend was seen in the temperature anomaly data. It is therefore not particularly surprising that no “statistically significant” warming trend can be detected in the data since 1997.

Let me also clarify something else. I agree that the term “statistically significant” is not necessarily well defined and does imply some pre-defined sense of what we regard as significant. If we were concerned about warming trends of 0.5 degrees C per decade or higher, then the current data (which suggest that since 1997 it has been between -0.08 and 0.17 degrees C per decade) would rule out warming at that level. However, we are concerned (in my view at least) about warming trends of between 0.1 and 0.2 degrees C per decade. The data since 1997 does not rule out this level of warming and we will only be able to establish a more accurate warming trend by considering more data.

I still find it odd that we’re still discussing this. I don’t really see the issue. All that I’m trying to point out is that you can’t use the data since 1997 to claim that warming has not taken place. You can, similarly, not use it to claim that it has. This shouldn’t really be a matter for much discussion. It’s a consequence of the analysis. It’s not really a matter of opinion (or at least I don’t think it is).

By the way, here’s anomaly data from HADCRUT4

1997 01 0.206

2012 08 0.532

That would suggest warming of 0.21 °C per decade.

Just to make it clear, I have chosen the smallest value I could find in 1997 and the largest in 2012. It just illustrates that you can’t really estimate the warming trend by only considering 2 data points.
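For anyone who wants to check the two-point numbers, here is the calculation written out. The helper name `two_point_rate` is my own. It simply divides the temperature difference by the elapsed time, using the two pairs of monthly values quoted in this thread.

```python
def two_point_rate(year0, month0, temp0, year1, month1, temp1):
    """Rate of change in °C per decade between two monthly anomaly values."""
    span_years = (year1 - year0) + (month1 - month0) / 12.0
    return 10.0 * (temp1 - temp0) / span_years

# HADCRUT4 pair quoted above: January 1997 and August 2012.
print(round(two_point_rate(1997, 1, 0.206, 2012, 8, 0.532), 2))    # 0.21

# BEST pair quoted earlier: November 1997 and November 2011.
print(round(two_point_rate(1997, 11, 0.510, 2011, 11, 0.471), 2))  # -0.03
```

Two pairs of points over roughly the same period give answers of opposite sign, which is why a fit to all of the data, with an error estimate, is needed.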

“By the way, here’s anomaly data from HADCRUT4”

Don’t be naughty, you have to choose the same month 😉

Why would I choose the same month? I wasn’t trying to show that your numbers were correct, I was trying to show that I could get a “warming trend” by considering data over a similar time interval. Choosing the same month would defeat the object of what I was trying to illustrate 🙂

This might be one of those cases where the problem is in the wording; by “there has been no warming” I would say the average person means “temperatures are not higher than in 1997”, so the two-point comparison “kinda” holds (if you choose the same month), but for you it means “the trend is below 0.1 degrees with a two sigma confidence”, which is something that still has not happened.

On the other hand, the 0.1 is an arbitrary choice, especially considering that before 1997 the rate was around 0.3 and that the increase was supposed to be *exponential*, so it is also possible that deniers pick that 0.3 instead of the 0.1 to claim “significance”.

Nonetheless, using trends and linear regressions for this kind of discussion still makes no sense to me; even if you had significance one way or the other it would still mean nothing in the general context of global warming. IMHO these discussions only make sense in the context of an appropriate physical model. Everything else seems to me like wishful thinking. It reminds me of those professional brokers using charting to assess the stock market… Excellent way to lose your money.

Anyhow, nice chat 🙂

Two final comments.

I think that a statistically significant warming trend at the 2σ level is well-defined. That is, the trend is positive and greater in magnitude than the error. I don’t see this as all that contentious.

The other comment is, I don’t believe that global temperatures rose at 0.3 degrees per decade prior to 1997. Maybe there was some really short time interval over which the trend was 0.3, but the error would have been large.

I should maybe add, that I tend – in some sense – to agree with you about the use of models. I have argued before (and I think I made a comment on your blog about this too), that relying primarily on global surface temperatures misses – to a certain extent – the fundamentals of global warming. Global warming is fundamentally an energy imbalance. If we are undergoing global warming, we are receiving more energy from the Sun than we are losing back into space. To reach equilibrium we would expect the surface temperatures to rise. However, just because the surface temperatures are not rising (or not rising fast) does not mean we are not undergoing global warming. There are many ways in which this energy can be absorbed and it could take quite some time for the surface temperatures to rise.

So, if the temperatures since 1997 have not risen and there is no energy imbalance, then we are not undergoing global warming. That is fairly fundamental conservation of energy. However, satellite data shows that there is indeed an energy imbalance, and so – whatever the global surface temperatures are doing – we are undergoing global warming. That should be irrefutable. We could consider whether the satellite data is correct or not, but we shouldn’t be debating whether or not an energy imbalance implies global warming.
